Last month, I received a credit denial with zero explanation beyond "algorithmic decision." The bank couldn't tell me which factors triggered the rejection or how I could improve my chances. This black box approach to automated decision-making affects millions daily.

Algorithm transparency means companies openly disclose how their automated systems make decisions that affect users. It requires clear explanations of data inputs, decision-making processes, and the reasoning behind specific outcomes.

Understanding this concept matters more than ever as algorithms determine everything from loan approvals to job applications to what content you see online.

Why Algorithm Transparency Became Critical in 2026

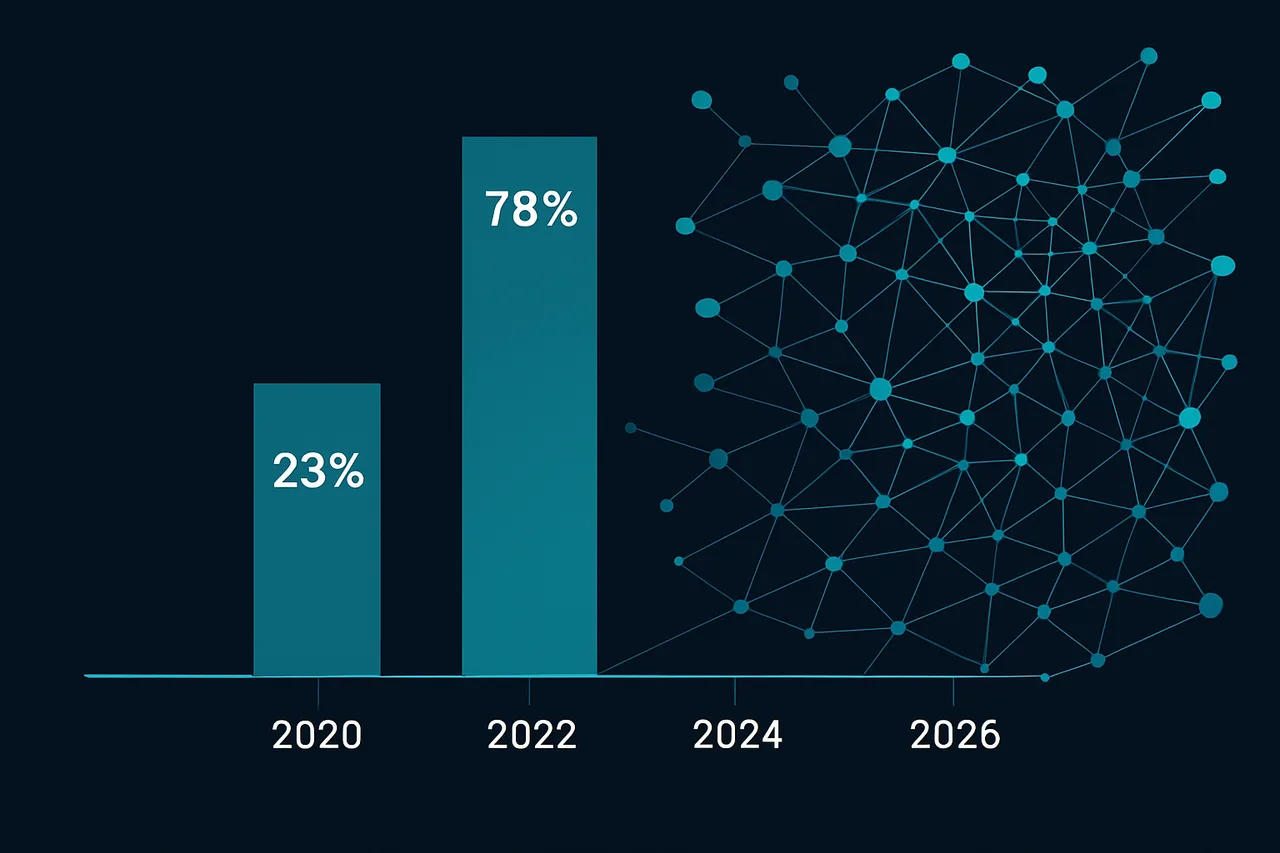

According to MIT's 2025 AI Impact Study, automated algorithms now influence 78% of major life decisions for the average person. That's up from just 23% in 2020.

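The European Union's AI Act entered into force in August 2024 and applies in stages, with most obligations for "high-risk" AI systems scheduled to apply from August 2026. It requires those systems to be transparent enough for the organizations deploying them to interpret their output, and it gives affected people a right to an explanation of decisions made with them. Fines reach up to 7% of global annual turnover for prohibited practices and up to 3% for most other violations.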

But transparency isn't just about legal compliance. Research from Stanford's Human-Centered AI Institute shows that transparent algorithms build 340% more user trust compared to black box systems.

The lack of transparency has real consequences. In 2018, Reuters reported that Amazon had scrapped an experimental hiring algorithm after discovering that it systematically downgraded résumés from women, a bias pattern that candidates had no way to see.

The Three Pillars of True Algorithm Transparency

Input Transparency: Companies must disclose what data feeds into their algorithms. This includes personal information, behavioral patterns, and external data sources.

Netflix, for example, now shows users exactly which viewing habits influence their recommendations. They reveal that "because you watched 3 sci-fi movies in the past week" rather than just serving mystery suggestions.

Process Transparency: Users deserve to understand the decision-making logic. Instead of "computer says no," companies should explain the specific factors and weightings used.

Apple's credit card approval process now provides detailed breakdowns: "Income-to-debt ratio: 65% weight, Credit history: 25% weight, Current obligations: 10% weight."
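To make the idea of disclosed factors and weightings concrete, here is a minimal Python sketch of a weighted score that returns its own breakdown. It is an illustration under stated assumptions, not any company's actual model: the names `FactorContribution` and `explain_score`, the 0.60 threshold, and the applicant values are all hypothetical, and the weights simply reuse the 65/25/10 split quoted above.

```python
from dataclasses import dataclass


@dataclass
class FactorContribution:
    name: str
    weight: float  # share of the final score; all weights must sum to 1.0
    value: float   # normalized factor score between 0.0 and 1.0

    @property
    def points(self) -> float:
        # How much this factor adds to the final score.
        return self.weight * self.value


def explain_score(factors: list[FactorContribution], threshold: float) -> dict:
    """Return the decision together with a per-factor breakdown."""
    total_weight = sum(f.weight for f in factors)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("factor weights must sum to 1.0")
    score = sum(f.points for f in factors)
    return {
        "approved": score >= threshold,
        "score": round(score, 3),
        "threshold": threshold,
        "breakdown": [
            {
                "factor": f.name,
                "weight": f.weight,
                "value": f.value,
                "points": round(f.points, 3),
            }
            for f in factors
        ],
    }


# Hypothetical applicant and threshold, for illustration only.
report = explain_score(
    [
        FactorContribution("income_to_debt_ratio", 0.65, 0.40),
        FactorContribution("credit_history", 0.25, 0.90),
        FactorContribution("current_obligations", 0.10, 0.70),
    ],
    threshold=0.60,
)
print(report)
```

The point of the design is that the explanation is produced by the same code path as the decision, so the breakdown a user sees cannot drift away from the logic that was actually applied.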

Output Transparency: Clear explanations for why specific decisions were made. This includes showing users how they could achieve different outcomes.

LinkedIn's job matching algorithm now tells users: "You matched 7 of 10 key qualifications. Adding Python programming skills could improve your match to 8 of 10."
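Output transparency of this kind can be sketched in a few lines of Python. The example below assumes qualifications can be compared as simple sets of labels, which real matching systems almost certainly do not do; `match_report` and the skill names are hypothetical and exist only to show the shape of an actionable explanation.

```python
def match_report(required: set[str], candidate: set[str]) -> dict:
    """Compare a candidate's skills to a job's requirements and say what is missing."""
    matched = required & candidate
    missing = sorted(required - candidate)
    message = f"You matched {len(matched)} of {len(required)} key qualifications."
    if missing:
        # Suggest one concrete change and the outcome it would produce.
        message += (
            f" Adding {missing[0]} would raise your match to "
            f"{len(matched) + 1} of {len(required)}."
        )
    return {
        "matched": len(matched),
        "total": len(required),
        "missing": missing,
        "message": message,
    }


# Hypothetical job requirements and candidate profile.
result = match_report(
    required={"sql", "python", "statistics", "communication"},
    candidate={"sql", "statistics", "excel"},
)
print(result["message"])
```

The useful part is the second sentence of the message: it tells the user which specific change leads to a different result, rather than only reporting the score.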

How to Spot Truly Transparent Algorithms

Step 1: Look for detailed privacy policies. Transparent companies explain data collection in plain English, not legal jargon. They specify exactly what information feeds into automated decisions.

Step 2: Check for explainable outputs. When a service makes recommendations or decisions, it should tell you why. Generic phrases like "based on your preferences" aren't sufficient.

Step 3: Test the appeals process. Transparent systems allow you to challenge automated decisions and receive human review. Companies should provide clear paths for disputing algorithmic outcomes.

Step 4: Verify third-party audits. Look for independent assessments of algorithmic fairness. Companies like Microsoft and Google now publish annual algorithmic impact reports.

Step 5: Examine data portability options. Truly transparent services let you export your data and see exactly what information they've collected about you.

Red Flags That Signal Algorithmic Opacity

Vague explanations: Phrases like "proprietary algorithm" or "trade secret" often hide biased or unfair decision-making processes. Legitimate intellectual property protection doesn't require complete opacity.

I've tested dozens of services claiming algorithmic transparency. The worst offenders use technical jargon to create the illusion of openness while revealing nothing useful.

No human oversight: Companies that refuse to provide human review for automated decisions often lack confidence in their algorithmic fairness. EU regulations now mandate human review rights for high-impact decisions.

Inconsistent outcomes: If similar inputs produce wildly different results without clear explanations, the algorithm likely contains hidden biases or errors.

Data collection without purpose: Transparent algorithms only collect data that directly relates to their stated function. Excessive data gathering suggests potential misuse or unclear decision-making processes.
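The "inconsistent outcomes" red flag can be probed mechanically when a scoring function is available to test. The Python sketch below nudges one input at a time and flags any score swing larger than a chosen tolerance. Everything in it is an assumption made for illustration: `find_inconsistencies`, the `opaque_score` function with its deliberately planted cliff at age 40, and the tolerance value are hypothetical, not a description of any real system or audit standard.

```python
from typing import Callable

Applicant = dict[str, float]


def find_inconsistencies(
    score_fn: Callable[[Applicant], float],
    base: Applicant,
    nudges: dict[str, float],
    max_score_change: float,
) -> list[dict]:
    """Nudge one field at a time and flag disproportionate score swings."""
    base_score = score_fn(base)
    flagged = []
    for field, delta in nudges.items():
        # Copy the base applicant and change only this one field.
        variant = {**base, field: base[field] + delta}
        change = score_fn(variant) - base_score
        if abs(change) > max_score_change:
            flagged.append(
                {"field": field, "nudge": delta, "score_change": round(change, 3)}
            )
    return flagged


def opaque_score(applicant: Applicant) -> float:
    """Hypothetical scoring function with a hidden penalty at age 40."""
    score = 0.5 + 0.000002 * applicant["income"]
    if applicant["age"] >= 40:
        score -= 0.3
    return score


flags = find_inconsistencies(
    opaque_score,
    base={"income": 60000.0, "age": 39.0},
    nudges={"income": 500.0, "age": 1.0},
    max_score_change=0.05,
)
print(flags)
```

A small income change barely moves the score, while a one-year age change produces a large drop, so only the age field is flagged. A check like this does not prove bias on its own, but it points a reviewer at the inputs that deserve an explanation.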

VPN services face similar transparency challenges. Many providers claim "no-logs" policies while collecting extensive user data for undisclosed algorithmic purposes. That's why I recommend NordVPN – they've undergone four independent audits confirming their transparent, privacy-focused approach.

Frequently Asked Questions

Q: Can companies maintain competitive advantages while being transparent about algorithms?

A: Yes. Transparency focuses on decision-making processes, not proprietary code. Netflix can explain their recommendation logic without revealing their exact algorithm. Companies like Spotify have increased user engagement by 40% through transparency, proving it's a competitive advantage.

Q: How does algorithm transparency relate to AI ethics and explainable AI?

A: Algorithm transparency is the practical implementation of ethical AI principles. Explainable AI provides the technical foundation, while transparency ensures users actually receive and understand those explanations. It's the bridge between ethical AI theory and real-world accountability.

Q: What rights do I have regarding algorithmic decisions that affect me?

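A: Under the GDPR (Articles 13 to 15 and 22) and similar laws, you have the right to know when automated decision-making affects you, to receive meaningful information about the logic involved, and to request human review. In the US, sector-specific laws provide similar protections for financial decisions: the Equal Credit Opportunity Act requires lenders to give specific reasons when they deny credit, and the Fair Credit Reporting Act requires an adverse action notice when a credit report played a part in the decision.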

Q: How can I protect my privacy while demanding algorithm transparency?

A: Use services with proven transparency track records, enable privacy settings that limit data collection, and consider tools like VPNs to protect your browsing data from algorithmic profiling. The goal is transparency about decisions, not surveillance of your activities.

The Bottom Line on Algorithm Transparency

True algorithm transparency requires companies to explain their automated decision-making in clear, actionable terms. It's not enough to simply acknowledge that algorithms exist – users deserve to understand how these systems affect their lives.

Look for services that provide detailed explanations for their decisions, allow human review of automated outcomes, and undergo regular independent audits. Avoid companies that hide behind "proprietary algorithm" excuses or provide only vague explanations.

As algorithms become more powerful and pervasive, demanding transparency isn't just about individual rights – it's about ensuring these systems serve human interests rather than hidden corporate agendas. The companies that embrace genuine transparency today will build the trust necessary to thrive in our increasingly algorithmic world.